The machine will not make mistakes unless the design of the machine is defective or the instructions received are wrong.

In 1916, the Battle of the Somme broke out. The two sides fought a trench war involving more than a million men in the Somme river area of France. Hoping to defeat the German army at one stroke, the British and French allied forces secretly transported a new weapon, the tank, to the front line. However, because of design defects and improper operation by soldiers, only 18 of the 49 tanks first transported to the front actually went into battle, and 9 of those got stuck in trenches and never reached the enemy positions.

In recent years, automatic driving, itself a new kind of machine, has run into the same trouble the tank once did: failure at the critical moment. Since Tesla, a giant in intelligent vehicles, launched its driver-assistance function, hundreds of traffic accidents have occurred worldwide, resulting in 175 deaths. About 200 of those accidents involved vehicles going out of control because of product design defects, and improper human operation also accounts for a good share.

So here comes the question: is automatic driving a Garden of Eden that benefits mankind or a Pandora's box that endangers the world? And how can humans tame this wild horse?

How does autopilot work?

Automatic driving is a relatively new technology and concept. In 1999, Navlab-V, a self-driving car developed by Carnegie Mellon University in the United States, completed the first driverless test, and automatic driving became a reality. However, it was Tesla that made the concept of automatic driving (assisted driving) truly popular more than a decade later.

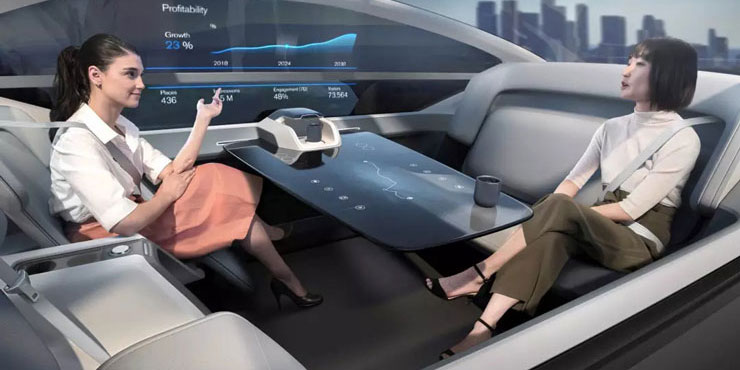

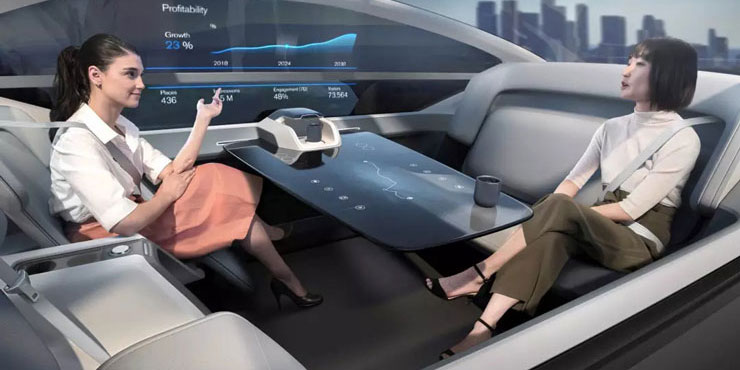

There are six levels of automatic driving. At L0, the driver does all of the driving. At L1, the vehicle can assist the driver with some driving tasks under specific circumstances. At L2, the vehicle can complete some driving tasks by itself, but the driver must constantly monitor the surrounding environment and be ready to take over in case of danger. At L3, the car can complete all driving actions independently and the driver no longer needs to monitor the road at all times, although the driver should still take over when the system asks. L4 and L5 are fully automatic driving, and the driver does not need to control the car at all. The difference is that L4 is fully independent only under specific conditions, such as on expressways, while L5 holds under any conditions, including complex environments such as rainstorms, heavy fog and heavy snow.
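The level definitions can be written down as a small lookup. This is only a sketch: the class and helper names are hypothetical, and the boundaries it draws (constant monitoring up to L2, a human fallback up to L3) are one common reading of the levels, not an official definition.

```python
from enum import IntEnum


class DrivingLevel(IntEnum):
    """Automation levels L0 to L5 as summarized in the text."""
    L0 = 0  # the driver does everything
    L1 = 1  # the vehicle assists with some tasks in specific circumstances
    L2 = 2  # the vehicle performs some tasks; the driver monitors constantly
    L3 = 3  # the vehicle drives itself; a human remains the fallback
    L4 = 4  # no driver needed, but only under specific conditions
    L5 = 5  # no driver needed under any conditions


def driver_must_monitor(level: DrivingLevel) -> bool:
    """True when the human has to watch the road continuously (assumed: L0-L2)."""
    return level <= DrivingLevel.L2


def needs_human_fallback(level: DrivingLevel) -> bool:
    """True when a human must be available to take over at all (assumed: L0-L3)."""
    return level <= DrivingLevel.L3


for level in DrivingLevel:
    print(level.name, driver_must_monitor(level), needs_human_fallback(level))
```

Using an ordered enum keeps the comparisons readable: "L2 or below" is simply `level <= DrivingLevel.L2`.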

Although automatic driving technology is still at an early stage of exploration, experts generally describe five core components: computer vision, sensor fusion, localization, path planning and control.

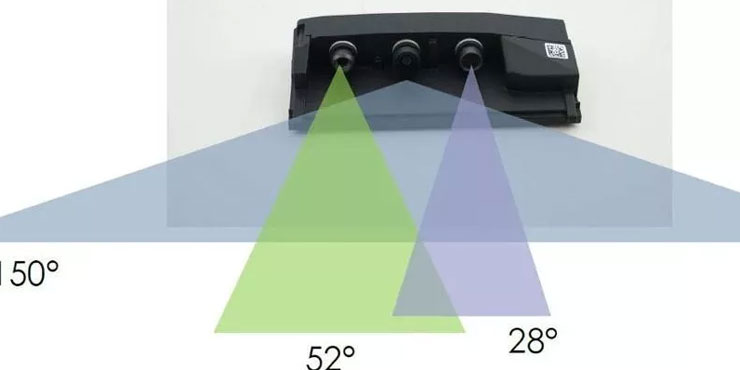

Computer vision is how we use cameras to observe the road. Humans demonstrate the power of vision every day by driving a car with essentially just two eyes and a brain. For automatic driving, we can use camera images to find lane lines or track other vehicles on the road.

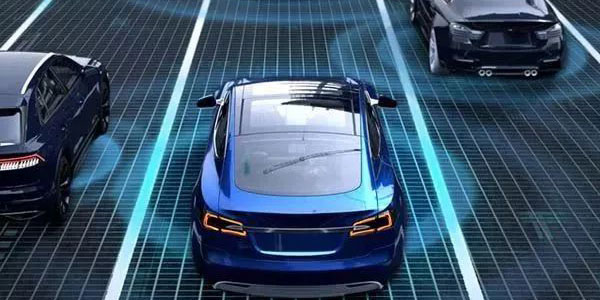

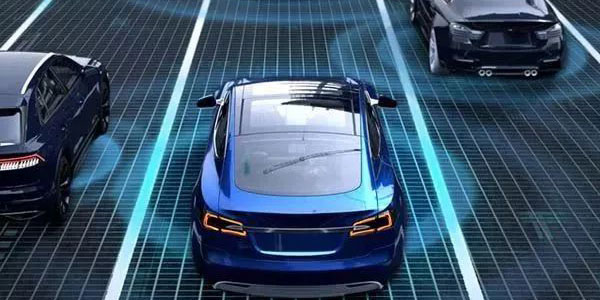
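To make the lane-finding idea concrete, here is a deliberately tiny sketch. It assumes a grayscale frame stored as a list of rows and a lane marking that is simply brighter than the road; real systems use far richer pipelines, and the function names are hypothetical.

```python
def bright_pixels(image, threshold=200):
    """Return (row, col) of every pixel at least as bright as the threshold."""
    return [(r, c)
            for r, row in enumerate(image)
            for c, value in enumerate(row)
            if value >= threshold]


def fit_lane_line(pixels):
    """Least-squares fit of col = slope * row + intercept through the pixels.

    Assumes the pixels span at least two different rows.
    """
    n = len(pixels)
    mean_r = sum(r for r, _ in pixels) / n
    mean_c = sum(c for _, c in pixels) / n
    var_r = sum((r - mean_r) ** 2 for r, _ in pixels)
    cov = sum((r - mean_r) * (c - mean_c) for r, c in pixels)
    slope = cov / var_r
    return slope, mean_c - slope * mean_r


# Synthetic 6x6 frame: dark road (30) with one bright painted line (255)
# that shifts one column to the right on every row.
frame = [[255 if c == r else 30 for c in range(6)] for r in range(6)]
slope, intercept = fit_lane_line(bright_pixels(frame))
print(slope, intercept)
```

Fitting column as a function of row, rather than the other way around, keeps near-vertical lane lines well behaved.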

Sensor fusion is how we integrate data from other sensors (such as radar and laser) with camera data to fully understand the vehicle's environment. Compared with optical cameras, these sensors are better at some measurements, such as distance or speed, and they can also work better in bad weather. By combining all of the sensor data, the vehicle gains a deeper understanding of its surroundings.

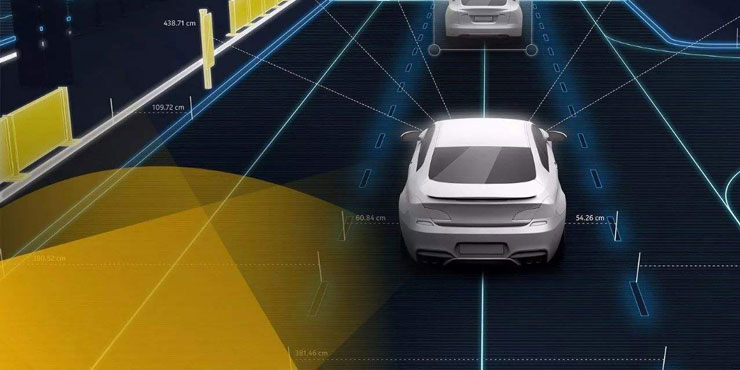

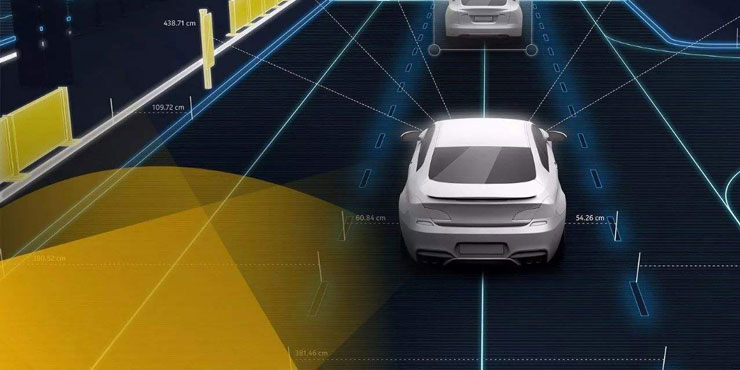
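One simple way to combine two sensors that measure the same quantity is inverse-variance weighting, where the more precise sensor gets the larger say. The text does not name a specific fusion method, so this is an assumed technique, and the readings below are made up for illustration.

```python
def fuse(estimates):
    """Inverse-variance weighted fusion of (value, variance) pairs.

    Returns the fused value and its variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


# Hypothetical readings of the distance to the car ahead, in meters.
camera = (31.0, 4.0)   # camera depth is noisy: variance 4 m^2
radar = (30.0, 0.25)   # radar ranging is tighter: variance 0.25 m^2
distance, variance = fuse([camera, radar])
print(round(distance, 2), round(variance, 3))
```

The fused variance is smaller than that of either sensor alone, which is the numerical version of "a deeper understanding of the surrounding environment".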

Localization is how we figure out where we are in the world, which is the next step after understanding the surroundings. We all have mobile phones with GPS, so it may seem that we already know where we are. In fact, GPS is only accurate to within 1 to 2 meters. Just think about how large 1 to 2 meters is: if a car is off by that much, it may hit something on the sidewalk. Therefore, we need more sophisticated mathematical algorithms to help the vehicle localize itself to within 1 to 2 cm.
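The text does not say which algorithms are used. As one illustration, a one-dimensional Kalman filter shows how blending the car's own motion estimate with repeated noisy position fixes shrinks the uncertainty below that of a single fix. The numbers are hypothetical, and this toy does not by itself reach centimeter accuracy.

```python
def predict(position, variance, displacement, motion_variance):
    """Move the estimate by the odometry displacement; uncertainty grows."""
    return position + displacement, variance + motion_variance


def update(position, variance, measurement, measurement_variance):
    """Blend in a position fix; uncertainty shrinks."""
    gain = variance / (variance + measurement_variance)
    return position + gain * (measurement - position), (1.0 - gain) * variance


# Hypothetical drive along a straight road: 1 m of travel per step and
# GPS fixes with a standard deviation of 1.5 m (variance 2.25 m^2).
position, variance = 0.0, 100.0  # start almost completely uncertain
for gps_fix in [1.2, 1.7, 3.4, 3.9, 5.1]:
    position, variance = predict(position, variance, 1.0, 0.01)
    position, variance = update(position, variance, gps_fix, 2.25)

print(round(position, 2), round(variance, 2))
```

After five steps the variance is well under the 2.25 m^2 of a single fix, because each new measurement is weighed against everything the filter already believes.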

The next step is path planning. Once we know what the world looks like and where we are, the path planning stage charts a trajectory through the world to the place we want to go. First, we predict what the other vehicles around us will do. Then we decide which strategy to adopt in response to those vehicles. Finally, we construct a trajectory, or path, that performs the maneuver safely and comfortably; producing it is the job of the planning algorithm.
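The three steps (predict, decide, build a trajectory) can be sketched for the simplest case of one car ahead in the same lane. Everything here is an illustrative assumption: the constant-velocity prediction, the 20 m safety gap, the fixed deceleration and the function names.

```python
def predict_position(position, speed, horizon):
    """Step 1: constant-velocity guess of where a car will be after `horizon` s."""
    return position + speed * horizon


def choose_behavior(ego_position, ego_speed, lead_position, lead_speed,
                    horizon=3.0, safe_gap=20.0):
    """Step 2: keep speed if the predicted gap stays safe, otherwise slow down."""
    gap = (predict_position(lead_position, lead_speed, horizon)
           - predict_position(ego_position, ego_speed, horizon))
    return "keep_speed" if gap >= safe_gap else "slow_down"


def build_trajectory(ego_position, ego_speed, behavior,
                     horizon=3.0, step=1.0, deceleration=2.0):
    """Step 3: (time, position) waypoints for the chosen behavior."""
    accel = -deceleration if behavior == "slow_down" else 0.0
    waypoints = []
    for i in range(1, int(horizon / step) + 1):
        t = i * step
        waypoints.append((t, ego_position + ego_speed * t + 0.5 * accel * t * t))
    return waypoints


# Ego car at 0 m doing 25 m/s; lead car 40 m ahead doing 15 m/s.
behavior = choose_behavior(0.0, 25.0, 40.0, 15.0)
trajectory = build_trajectory(0.0, 25.0, behavior)
print(behavior, trajectory)
```

Here the predicted gap after 3 s is only 10 m, so the planner chooses to slow down and emits waypoints that fall progressively behind the constant-speed path.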

Control is the last step in the automatic driving cycle. Once the path planning stage has settled on a trajectory, the vehicle needs to operate the steering wheel, accelerator and brake under program control to follow it and reach its destination.
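A classic way to turn a target value into actuator commands is a PID controller. The text does not say which controller is used, so this is an assumed example, with a toy vehicle model in which the command is directly an acceleration; the gains are arbitrary illustrative values.

```python
class PID:
    """Textbook proportional-integral-derivative controller."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.previous_error = None

    def step(self, error, dt):
        """Return the control command for the current error."""
        self.integral += error * dt
        if self.previous_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.previous_error) / dt
        self.previous_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Toy model (an assumption): speed changes by command * dt on every step.
controller = PID(kp=0.8, ki=0.1, kd=0.05)
speed, target, dt = 0.0, 20.0, 0.1
for _ in range(600):  # simulate 60 seconds
    command = controller.step(target - speed, dt)
    speed += command * dt

print(round(speed, 2))
```

A real vehicle would also limit the command to what the accelerator and brake can deliver, and would run a similar loop for steering.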

How is automatic driving implemented?

At present, there are two main approaches to automatic driving. One is the radar school represented by Google. Although optical cameras are also used to help collect driving information, the core is still to rely on radar as the eyes of the car. The other is the vision school represented by Tesla. Its cars are also equipped with sensors including radar, but they rely mainly on 360-degree optical cameras to perceive the surrounding environment the way human eyes do. Which is more suitable for automatic driving?

(1) Radar school

Before talking about radar, we can first look at its close relative, sonar. The original depth-sensing robot was the humble bat, some 50 million years ago! Bats (and dolphins) navigate by echolocation, the natural counterpart of sonar (sound navigation and ranging). Sonar does not use beams of light such as lasers; it uses sound waves to measure distance.

After 50 million years of biological exclusivity, World War I and the arrival of submarine warfare brought forward the first large-scale deployment of artificial sonar sensors. Sonar works well in water, where sound travels much better than light or radio waves (more on those in a moment). At present, sonar sensors are used in cars mainly as parking sensors. These short-range sensors (around 5 m) provide an inexpensive way to know how far a wall is from the car. Sonar has not yet been proven at the ranges that automatic driving vehicles require (60 m or more).

Radar (radio detection and ranging), much like sonar, is another technology developed during a notorious world war (World War II, this time). Instead of light or sound waves, it uses radio waves to measure distance. It is a proven method that can accurately detect and track objects as far as 200 meters away.

From the market point of view, two types of radar are used in automatic driving. One is millimeter-wave radar, that is, radar whose working frequency lies in the millimeter-wave band (automotive radar usually operates at 76-81 GHz). Its ranging principle is the same as that of general radar: it sends out radio waves, receives the echoes, and derives the target's position from the time difference between sending and receiving.
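The ranging principle reduces to one line of arithmetic: the wave travels to the target and back, so the distance is half the round-trip time multiplied by the wave speed. The same formula covers ultrasonic parking sensors if the speed of sound is used instead. The function name and example timings are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s, radio waves (radar)
SPEED_OF_SOUND_AIR = 343.0      # m/s in air at about 20 degrees C (ultrasonic)


def echo_range(round_trip_seconds, wave_speed):
    """Distance to the target: the wave goes out and back, so halve the path."""
    return wave_speed * round_trip_seconds / 2.0


# A radar echo that returns after 1 microsecond: roughly 150 m away.
print(round(echo_range(1e-6, SPEED_OF_LIGHT), 1))
# An ultrasonic echo that returns after 20 milliseconds: roughly 3.4 m away.
print(round(echo_range(0.02, SPEED_OF_SOUND_AIR), 2))
```

The huge difference in wave speed is why radar needs timing at the nanosecond scale, while ultrasonic sensors can work with milliseconds.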

The other is lidar, which detects the position, velocity and other characteristics of a target by emitting laser beams. Vehicle-mounted lidar typically uses multiple laser transmitters and receivers to build a three-dimensional point cloud map. The more laser beams (lines) a unit has, the more points it collects per second and the stronger its detection performance. By generating a huge 3D map, the vehicle can perceive its environment in real time and even know the lane boundaries in advance. It can also tell whether there are stop signs or traffic lights as far as 500 meters ahead, and it knows the speed and direction of nearby cyclists and pedestrians.
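Each lidar return is essentially a measured range along a known beam direction, and a point cloud is what results from converting many such returns into 3D coordinates. The sketch below assumes a simple spherical-to-Cartesian conversion with x forward, y to the left and z up; the axis convention and sample returns are assumptions, not any particular sensor's format.

```python
import math


def to_cartesian(distance, azimuth_deg, elevation_deg):
    """Convert one lidar return to (x, y, z) in the sensor frame.

    Assumed convention: x forward, y left, z up; angles in degrees.
    """
    azimuth = math.radians(azimuth_deg)
    elevation = math.radians(elevation_deg)
    horizontal = distance * math.cos(elevation)
    return (horizontal * math.cos(azimuth),
            horizontal * math.sin(azimuth),
            distance * math.sin(elevation))


# Hypothetical returns: (range in m, azimuth in degrees, elevation in degrees).
returns = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, 30.0)]
point_cloud = [to_cartesian(*r) for r in returns]
for point in point_cloud:
    print(tuple(round(v, 2) for v in point))
```

More beams simply means more elevation angles sampled per rotation, and therefore a denser cloud.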

However, lidar has always been plagued by price. More beams mean a more expensive unit: a 64-beam lidar costs about 10 times as much as a 16-beam one. For example, the 64-beam lidar on Google's driverless car costs more than 100 thousand US dollars, which is why lidar-equipped smart cars are not yet practical for the mass market.

(2) Vision school

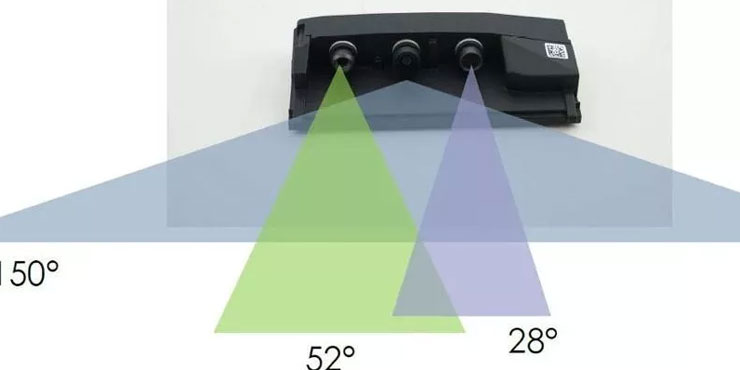

Now let’s focus on the vision school, which might as well be called the Tesla school, because it is Tesla that pursues automatic driving with simple optical cameras and human-like vision. Its CEO Elon Musk has said on social media and at an annual meeting: "The radar scheme on the vehicle is extremely stupid. Anyone or enterprise using the lidar scheme will eventually fail. Their painstaking use of these expensive sensors is itself a trouble." Tesla uses eight cameras to identify objects in the real world (the field of view is 360 degrees, and the monitoring distance can reach 250 meters). The images obtained by the cameras include pedestrians, other vehicles, animals and obstacles. Meanwhile, millimeter-wave radar, ultrasonic sensors and an inertial measurement unit record the vehicle's current environmental data and send it to Tesla's autopilot computer. After running its algorithms, the autopilot computer transmits speed and direction commands to the steering system, accelerator and brake to control the vehicle.

However, the images captured by ordinary monocular and binocular cameras are two-dimensional and lack depth information, so it’s hard to judge the distance of an object. To address this, Tesla has also installed a forward-looking trinocular camera. The on-board processor judges the distance of an object by comparing the differences between the images from two of the cameras, and then performs perception, segmentation, detection, tracking and other operations on the input images. All of this relies on the real trump card of the vision system: the automatic driving algorithm.
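Judging distance by comparing two camera images is the classic stereo principle: a nearby object shifts more between the two views than a distant one. Under a simple pinhole model, depth equals focal length times camera spacing divided by that shift (the disparity). The parameter values below are made up for illustration and are not Tesla's camera specifications.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Pinhole stereo model: depth = focal length * baseline / disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means infinitely far")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, cameras 0.2 m apart.
for disparity in (40.0, 8.0, 4.0):
    print(disparity, depth_from_disparity(1000.0, 0.2, disparity))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters little up close but a great deal at long range, which is one reason camera-only depth is hard.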

Tesla has a dedicated deep-learning neural network, HydraNet, which contains 48 different neural networks that can output 1,000 different prediction tensors. In theory, this super network can detect 1,000 objects at the same time. It is worth noting that Tesla can also predict the depth of each pixel in streaming video algorithmically, which means that, as long as the algorithm is advanced and strong enough, the depth information captured by visual sensors could even exceed that of lidar.

However, optical cameras do not tolerate every environment. In harsh conditions such as fog, rain, snow, and sand or dust, the information they capture is sparse and inaccurate. Compared with the all-weather capability of lidar, the vision school is currently at a disadvantage. And with the price of lidar also falling, it may be lidar that tames the wild horse in the end.